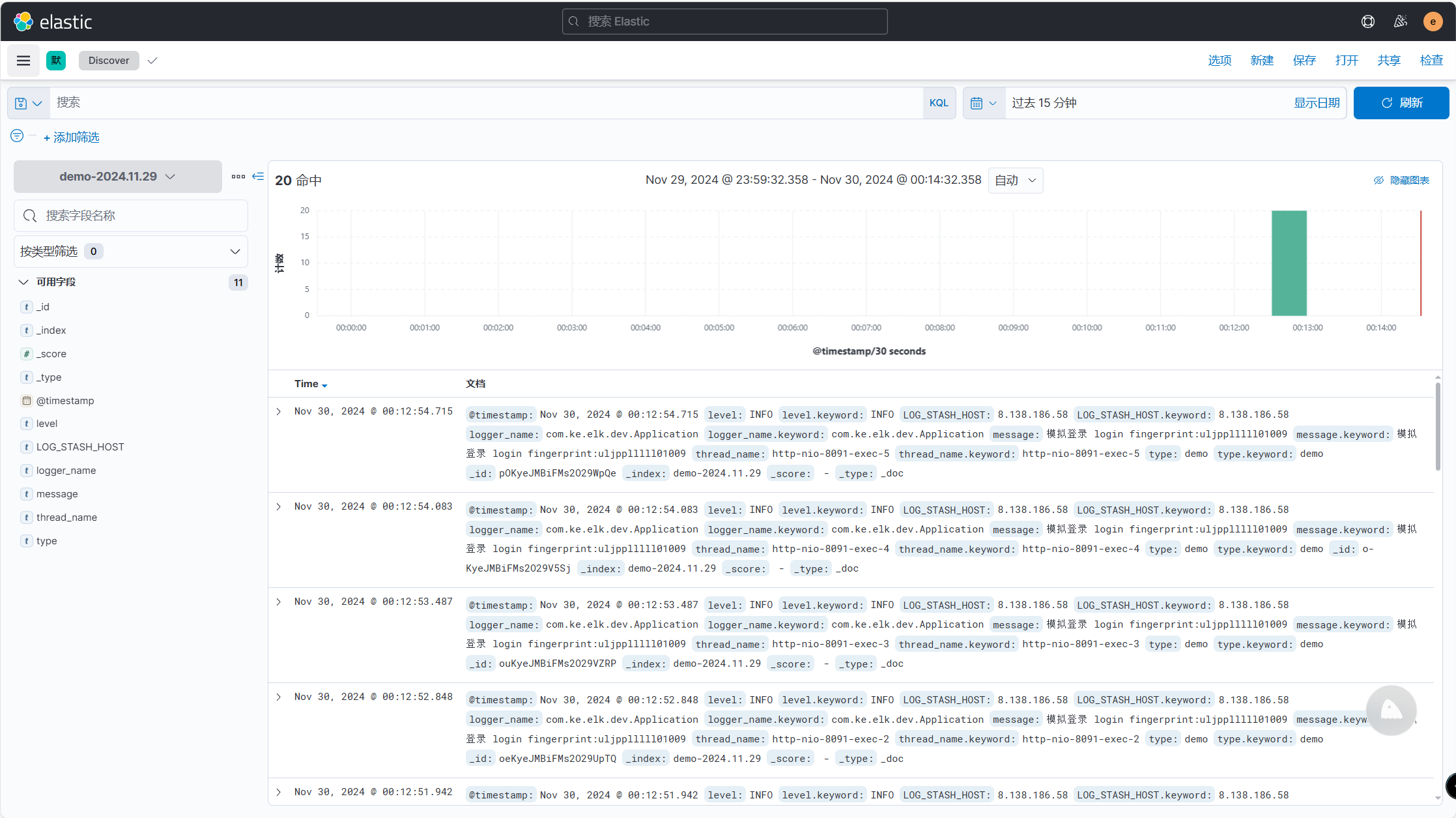

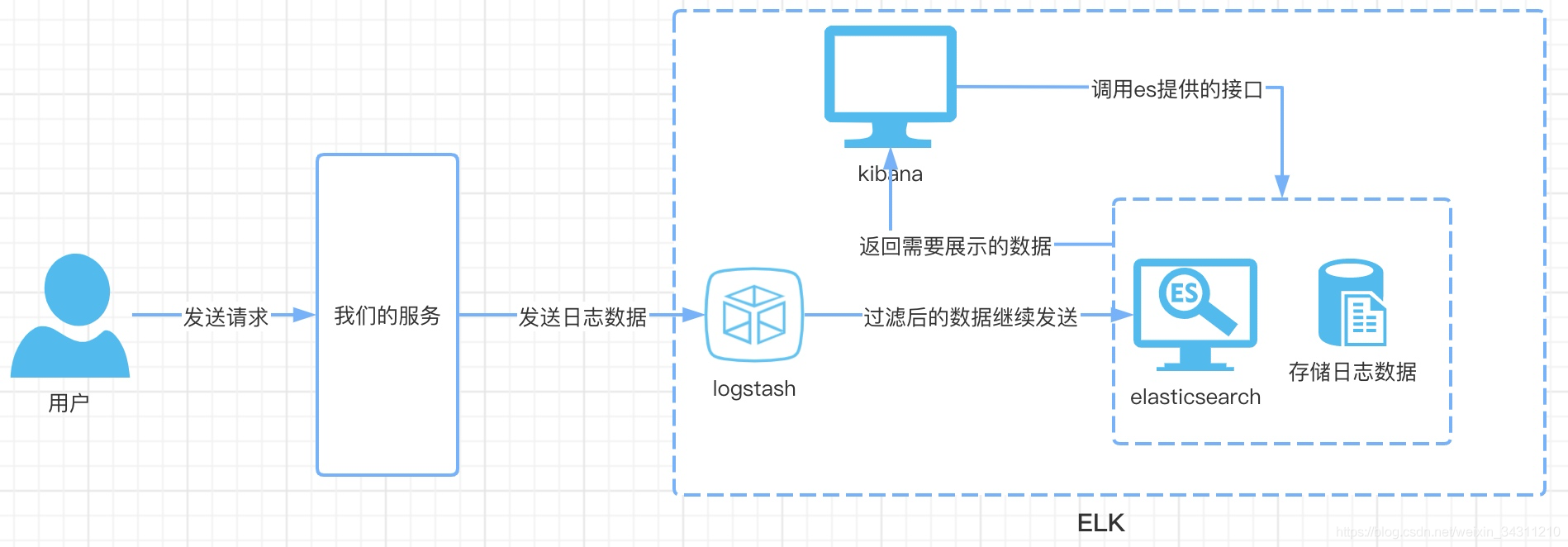

ELK Log Collection

Architecture diagram

- One Alibaba Cloud server with 4 CPUs and 8 GB of RAM

- Docker installed on the cloud server

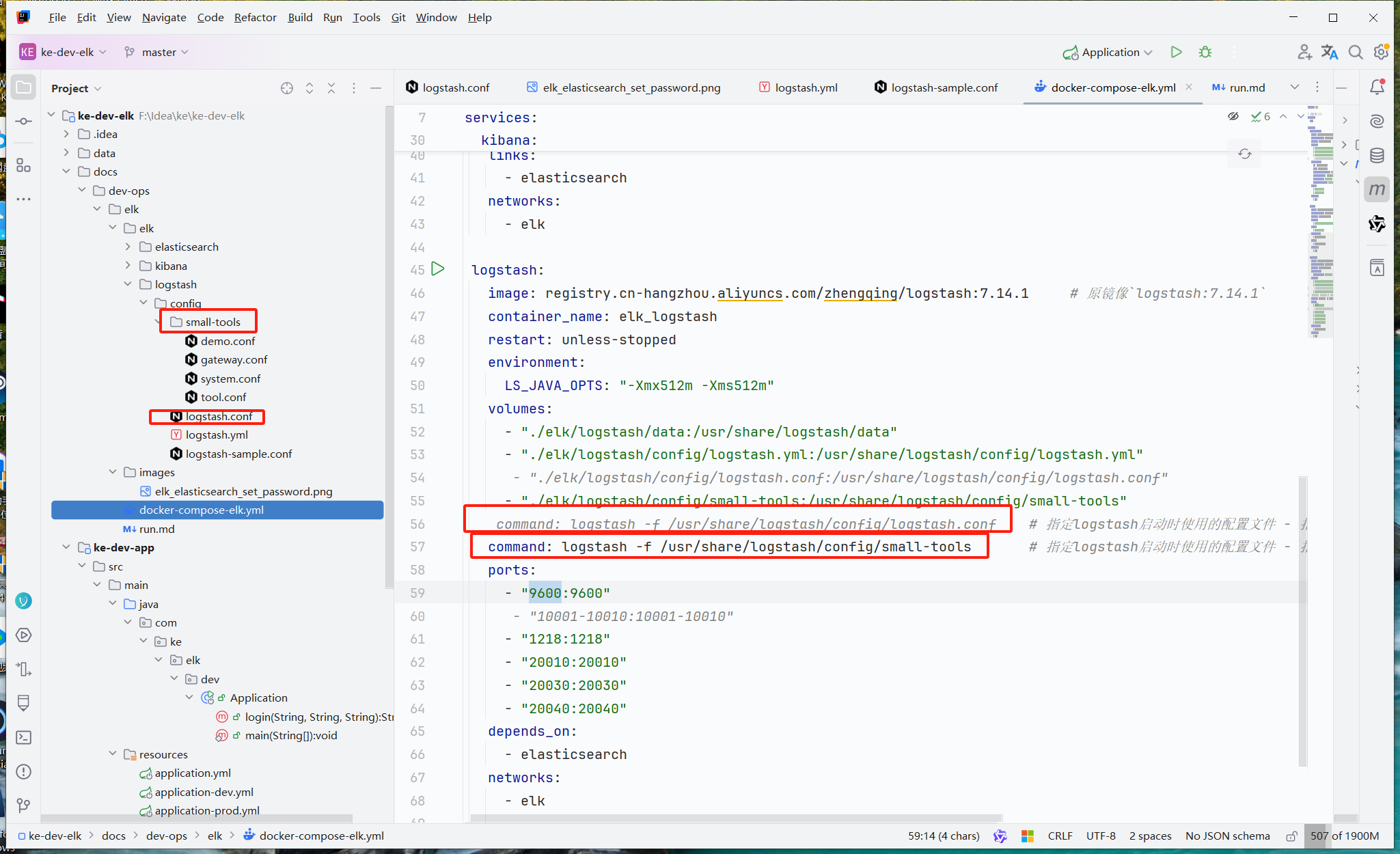

Logstash configuration

Optional configuration

version: '3'

# Bridge network "elk" so the containers can reach each other
networks:
  elk:

services:
  elasticsearch:
    image: registry.cn-hangzhou.aliyuncs.com/zhengqing/elasticsearch:7.14.1 # original image: `elasticsearch:7.14.1`
    container_name: elk_elasticsearch # the container is named 'elk_elasticsearch'
    restart: unless-stopped # always restart the container when it exits, but do not restart containers that were already stopped when the Docker daemon starts
    volumes: # volume mounts: map host paths to container paths
      - "./elk/elasticsearch/data:/usr/share/elasticsearch/data"
      - "./elk/elasticsearch/logs:/usr/share/elasticsearch/logs"
      - "./elk/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml"
      # - "./elk/elasticsearch/config/jvm.options:/usr/share/elasticsearch/config/jvm.options"
    environment: # environment variables, equivalent to -e in `docker run`
      TZ: Asia/Shanghai
      LANG: en_US.UTF-8
      TAKE_FILE_OWNERSHIP: "true" # file ownership / permissions
      discovery.type: single-node
      ES_JAVA_OPTS: "-Xmx512m -Xms512m"
      ELASTIC_PASSWORD: "123456" # password of the elastic account
    ports:
      - "9200:9200"
      - "9300:9300"
    networks:
      - elk

  kibana:
    image: registry.cn-hangzhou.aliyuncs.com/zhengqing/kibana:7.14.1 # original image: `kibana:7.14.1`
    container_name: elk_kibana
    restart: unless-stopped
    volumes:
      - "./elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml"
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch
    links:
      - elasticsearch
    networks:
      - elk

  logstash:
    image: registry.cn-hangzhou.aliyuncs.com/zhengqing/logstash:7.14.1 # original image: `logstash:7.14.1`
    container_name: elk_logstash
    restart: unless-stopped
    environment:
      LS_JAVA_OPTS: "-Xmx512m -Xms512m"
    volumes:
      - "./elk/logstash/data:/usr/share/logstash/data"
      - "./elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml"
      # - "./elk/logstash/config/logstash.conf:/usr/share/logstash/config/logstash.conf"
      - "./elk/logstash/config/small-tools:/usr/share/logstash/config/small-tools"
    # command: logstash -f /usr/share/logstash/config/logstash.conf # pipeline config used at startup: a single file
    command: logstash -f /usr/share/logstash/config/small-tools # pipeline config used at startup: a directory (Logstash reads every config file in the directory and merges them in memory)
    ports:
      - "9600:9600"
      # - "10001-10010:10001-10010"
      - "1218:1218"
      - "20010:20010"
      - "20030:20030"
      - "20040:20040"
    depends_on:
      - elasticsearch
    networks:
      - elk
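Once the stack is up, it can help to confirm that the published ports accept connections before wiring in the application. The following is a minimal sketch, not part of the original project: the class name `PortProbe`, the default host `127.0.0.1`, and the 2-second timeout are assumptions, while the port list is taken from the compose file.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortProbe {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isPortOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, or timed out
        }
    }

    public static void main(String[] args) {
        // Assumption: the stack runs locally; pass the server IP as the first argument otherwise.
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        // Elasticsearch, Kibana, Logstash API, and the Logstash port the application logs to.
        int[] ports = {9200, 5601, 9600, 20040};
        for (int port : ports) {
            String state = isPortOpen(host, port, 2000) ? "open" : "closed";
            System.out.printf("%s:%d -> %s%n", host, port, state);
        }
    }
}
```

An open port only shows that something is listening on the mapping; it does not prove the service behind it has finished starting.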

Spring Boot integration

Add the dependency

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>7.3</version>
</dependency>

Configuration file

server:
  port: 8091
  tomcat:
    max-connections: 20
    threads:
      max: 20
      min-spare: 10
    accept-count: 10

# Logging
logging:
  level:
    root: info
  config: classpath:logback-spring.xml

# TODO: IP of the server where Logstash is deployed
logstash:
  host: 8.138.186.58

Log collection configuration

<?xml version="1.0" encoding="UTF-8"?>
<!-- Log levels from lowest to highest: TRACE < DEBUG < INFO < WARN < ERROR (Logback has no FATAL level). If the level is set to WARN, messages below WARN are not output -->
<configuration scan="true" scanPeriod="10 seconds">
    <contextName>logback</contextName>

    <!-- name is the variable name; source is the Spring property that supplies its value. The value is inserted into the logger context, and the variable can then be referenced with "${}". -->
    <springProperty scope="context" name="log.path" source="logging.path"/>

    <!-- Logstash host -->
    <springProperty name="LOG_STASH_HOST" scope="context" source="logstash.host" defaultValue="127.0.0.1"/>

    <!-- Log format -->
    <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter"/>
    <conversionRule conversionWord="wex"
                    converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter"/>
    <conversionRule conversionWord="wEx"
                    converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter"/>

    <!-- Console output -->
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <!-- This appender is meant for development. Only the minimum level is configured; the console prints messages at or above this level -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>info</level>
        </filter>
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- File output -->
    <!-- Time-based rolling output for INFO-level logs -->
    <appender name="INFO_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- Path and file name of the active log file -->
        <file>./data/log/log_info.log</file>
        <!-- Log file output format -->
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <!-- Path and name format of the daily archives -->
            <fileNamePattern>./data/log/log-info-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>100MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Number of days to keep log files -->
            <maxHistory>15</maxHistory>
            <totalSizeCap>10GB</totalSizeCap>
        </rollingPolicy>
    </appender>

    <!-- Time-based rolling output for ERROR-level logs -->
    <appender name="ERROR_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- Path and file name of the active log file -->
        <file>./data/log/log_error.log</file>
        <!-- Log file output format -->
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>./data/log/log-error-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>100MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Number of days to keep log files (adjust to the disk space available on the server) -->
            <maxHistory>7</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <!-- WARN level and above -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>WARN</level>
        </filter>
    </appender>

    <!-- Asynchronous output -->
    <appender name="ASYNC_FILE_INFO" class="ch.qos.logback.classic.AsyncAppender">
        <!-- When the queue's remaining capacity drops below discardingThreshold, TRACE, DEBUG, and INFO events are discarded; the default of -1 means 20% of queueSize; 0 disables this discarding -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Queue depth; this value affects performance. The default is 256 -->
        <queueSize>8192</queueSize>
        <!-- neverBlock=true: events may be lost when the queue is full, but the application threads are never blocked -->
        <neverBlock>true</neverBlock>
        <!-- Whether to extract caller data -->
        <includeCallerData>false</includeCallerData>
        <appender-ref ref="INFO_FILE"/>
    </appender>

    <appender name="ASYNC_FILE_ERROR" class="ch.qos.logback.classic.AsyncAppender">
        <!-- When the queue's remaining capacity drops below discardingThreshold, TRACE, DEBUG, and INFO events are discarded; the default of -1 means 20% of queueSize; 0 disables this discarding -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Queue depth; this value affects performance. The default is 256 -->
        <queueSize>1024</queueSize>
        <!-- neverBlock=true: events may be lost when the queue is full, but the application threads are never blocked -->
        <neverBlock>true</neverBlock>
        <!-- Whether to extract caller data -->
        <includeCallerData>false</includeCallerData>
        <appender-ref ref="ERROR_FILE"/>
    </appender>

    <!-- Development environment: debug-level logging for the application package -->
    <springProfile name="dev">
        <logger name="com.nmys.view" level="debug"/>
    </springProfile>

    <!-- Appender that sends logs to Logstash -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Reachable Logstash log collection port -->
        <destination>${LOG_STASH_HOST}:20040</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>

    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="ASYNC_FILE_INFO"/>
        <appender-ref ref="ASYNC_FILE_ERROR"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>
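The `discardingThreshold` comments in the async appenders describe a rule that is easy to misread, so here is a small sketch of it in plain Java. It is a hand-written model of the documented behaviour, not Logback's own code; the class and method names are made up for illustration.

```java
final class AsyncDiscardSketch {

    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    // An unset threshold (-1) falls back to 20% of the queue size.
    static int effectiveThreshold(int queueSize, int discardingThreshold) {
        return discardingThreshold == -1 ? queueSize / 5 : discardingThreshold;
    }

    // True when the event would be dropped by the threshold rule before it is queued.
    static boolean isDiscarded(Level level, int remainingCapacity,
                               int queueSize, int discardingThreshold) {
        boolean lowPriority = level.ordinal() <= Level.INFO.ordinal();
        return lowPriority
                && remainingCapacity < effectiveThreshold(queueSize, discardingThreshold);
    }

    public static void main(String[] args) {
        // Threshold 0, as configured above: the rule never fires, even with one free slot.
        System.out.println(isDiscarded(Level.INFO, 1, 8192, 0));      // false
        // Default threshold: 8192 / 5 = 1638, so INFO is dropped with 1000 free slots.
        System.out.println(isDiscarded(Level.INFO, 1000, 8192, -1));  // true
        // WARN and ERROR are never dropped by this rule.
        System.out.println(isDiscarded(Level.ERROR, 1000, 8192, -1)); // false
    }
}
```

With `discardingThreshold` set to 0 the threshold rule is effectively off, so in this configuration the only source of loss is `neverBlock=true`, which drops events of any level once the queue is completely full.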

Write a test

package com.ke.elk.dev;

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Configurable;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

@Slf4j
@SpringBootApplication
@Configurable
@RestController
@RequestMapping("/api/ratelimiter/")
public class Application {

    public static void main(String[] args) {
        // Pass args through so command-line properties are honored
        SpringApplication.run(Application.class, args);
    }

    /**
     * curl "http://localhost:8091/api/ratelimiter/login?fingerprint=uljpplllll01009&uId=1000&token=8790"
     */
    @RequestMapping(value = "login", method = RequestMethod.GET)
    public String login(String fingerprint, String uId, String token) {
        // This line is written to the console, the log files, and Logstash
        log.info("Simulated login fingerprint:{}", fingerprint);
        return "Simulated login: login succeeded " + uId;
    }

}
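When `login` logs a message, the `LOGSTASH` appender serializes the event as a JSON object and writes it to the TCP destination, one event per line. The sketch below approximates that wire format by hand so the shape is visible. It is not the encoder's code: the field set is a subset of the names `LogstashEncoder` commonly emits, the timestamp format is simplified to UTC, and the sample values are invented.

```java
import java.time.Instant;

final class JsonLineSketch {

    // Minimal JSON string escaping: quotes, backslashes, and common whitespace escapes.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    // Builds one newline-terminated JSON event.
    static String toJsonLine(Instant timestamp, String level, String logger,
                             String thread, String message) {
        return "{\"@timestamp\":\"" + timestamp + "\","
                + "\"@version\":\"1\","
                + "\"message\":\"" + escape(message) + "\","
                + "\"logger_name\":\"" + escape(logger) + "\","
                + "\"thread_name\":\"" + escape(thread) + "\","
                + "\"level\":\"" + escape(level) + "\"}\n";
    }

    public static void main(String[] args) {
        // Illustrative values only; the timestamp and thread name are arbitrary.
        System.out.print(toJsonLine(Instant.parse("2024-01-01T00:00:00Z"), "INFO",
                "com.ke.elk.dev.Application", "http-nio-8091-exec-1",
                "login fingerprint:uljpplllll01009"));
    }
}
```

On the receiving side this assumes a Logstash pipeline with a TCP input on port 20040 that decodes JSON lines; that pipeline file lives in the mounted `small-tools` directory and is not shown in this article.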

The project above is available at: https://gitee.com/keyyds/ke-dev-elk

Run the test

After starting the project, call the endpoint to test it.

Test endpoint

http://localhost:8091/api/ratelimiter/login?fingerprint=uljpplllll01009&uId=1000&token=8790
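The same request can be issued from Java, which is handy for generating several log events in a row. This is a minimal sketch assuming Java 11 or later and the application running locally on port 8091; the class name `LoginSmokeTest` and the helper `buildUrl` are illustrative and not part of the project.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class LoginSmokeTest {

    // Appends URL-encoded query parameters to the base URL, in insertion order.
    static String buildUrl(String base, Map<String, String> params) {
        String query = params.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                        + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
        return base + "?" + query;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("fingerprint", "uljpplllll01009");
        params.put("uId", "1000");
        params.put("token", "8790");
        String url = buildUrl("http://localhost:8091/api/ratelimiter/login", params);

        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } catch (IOException | InterruptedException e) {
            // The application is probably not running or not reachable.
            System.out.println("Request failed: " + e);
        }
    }
}
```

Each successful call should produce one `log.info` line in the application, which is what ends up in the index inspected in the next step.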

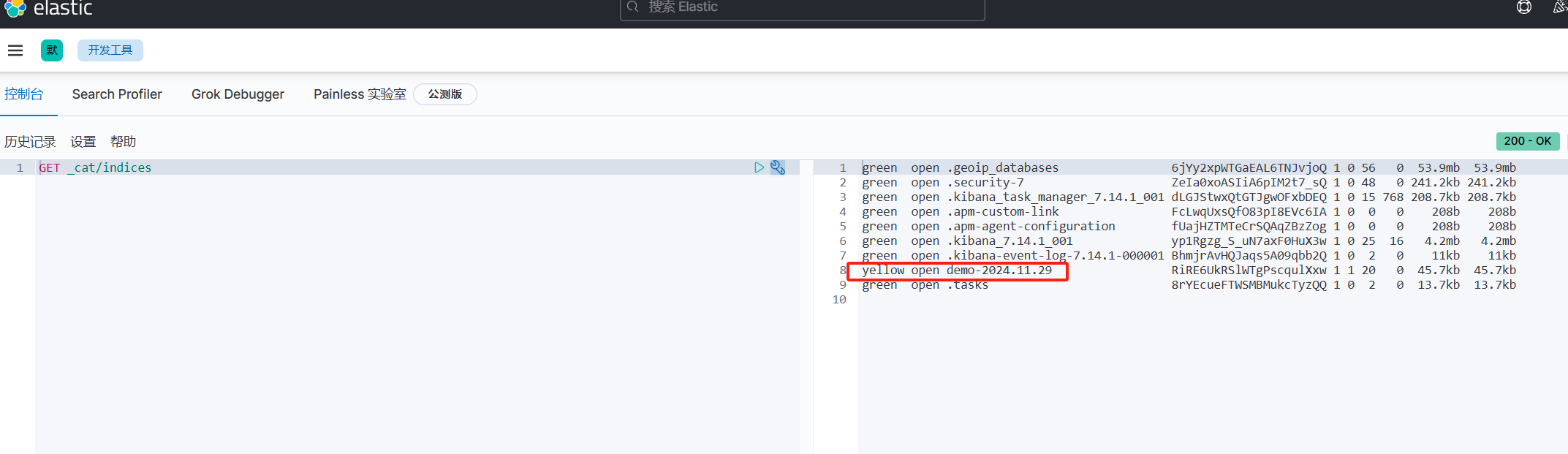

You can now see the log index pushed by the Spring Boot project.

- Create the index pattern, and be sure to select the demo-xxxxx index

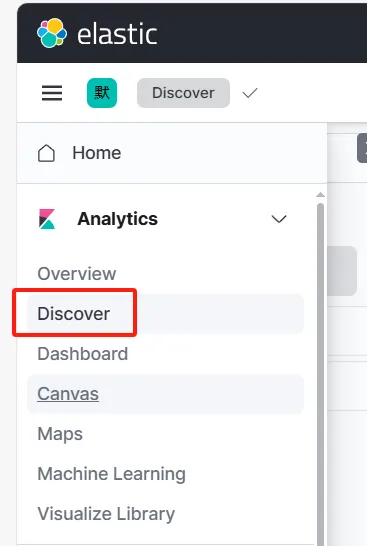

- View the logs